Overview

Organisation: Marine engineering service

Structure: 26 employees

Goal: Augment and automate project administrative processes

Solution: AI agent development with OpenAI to assist engineers, administrators, and project managers in project documentation.

Technology: OpenAI Agents SDK and Assistants API, Lovable, Replit, GitHub.

This project builds on five process improvements designed for a marine engineering service in 2024. It uses current agentic AI technology to augment the project administrative processes undertaken by engineers, administrators, and project managers, with the aim of improving project timelines and quality outcomes and reducing the labour cost of searching for information, fixing errors, and redoing work. Backend workflows built on the OpenAI Agents SDK and Assistants API were combined with a Lovable interface and a Replit backend.

Analysis

Engineering project handover in the 2024 case study context was characterised by high document volumes, strict compliance requirements, and coordination across engineers, administrators, and project managers. While the 2024 process optimisation initiative reduced duplication and improved efficiency through structured workflows and enablement tools, it exposed a persistent structural issue: compliance knowledge remained tacit, fragmented, and manually enforced. Critical rules around document completeness, naming, versioning, signatures, and dates were embedded in individual experience, spreadsheets, and procedural manuals.

As workload increased or staff changed, this reliance on human recall created variability, rework, approval delays, and latent risk. Administrators carried a disproportionate cognitive burden, engineers received late-stage feedback, and project managers relied on trust rather than objective assurance.

With generative AI maturing, and with guidance from PMI, McKinsey, and Engineers Australia emphasising augmentation over automation in high-risk professional settings, the opportunity emerged to externalise compliance logic into a consistent, role-aware system. In short, engineering handover packages lacked a consistent, scalable mechanism to enforce compliance rules across roles, which resulted in avoidable rework, approval delays, and risk exposure. A solution was required to formalise and operationalise compliance knowledge within the workflow, augmenting engineers, administrators, and project managers without removing human accountability.

Objectives

Formalise compliance knowledge by externalising document, naming, versioning, and approval rules into machine-readable structures rather than relying on tacit human expertise.

Reduce rework and approval delays by providing early, role-appropriate feedback to engineers and administrators before handover packages reach final review.

Standardise quality and auditability of engineering handover packages across projects, staff, and workload conditions.

Augment multi-role workflows by supporting engineers, administrators, and project managers within a single, role-aware system while preserving human judgement and accountability.

Establish a scalable foundation for AI adoption that aligns with professional-services risk constraints and can evolve from assistance to augmentation without disruptive automation.

User Personas

Engineer

Produces technical documentation and needs rapid feedback on completeness and compliance.

As an engineer, I want to upload my documents and instantly see if they meet required rules, so that I can correct issues early and avoid rework.

As an engineer, I want clear, specific feedback on what is missing or non-compliant, so that I can focus on technical quality rather than remembering administrative rules.

As an engineer, I want confidence that my handover inputs are structurally sound before submission, so that approvals are not delayed for avoidable reasons.

Administrator

Collates, validates, and packages documents against strict rules and naming conventions.

As an administrator, I want the system to automatically audit all documents against compliance matrices, so that I do not rely on manual checklists or tacit knowledge.

As an administrator, I want the tool to flag errors, gaps, and inconsistencies consistently across all work orders, so that quality is standardised regardless of workload or staff experience.

As an administrator, I want to compile a complete handover package with system validation, so that I can submit it with confidence and reduced cognitive effort.

Project Manager

Reviews compiled packages and makes approval decisions with confidence in their integrity.

As a project manager, I want a clear, consolidated view of handover package readiness, so that I can assess approval status quickly.

As a project manager, I want assurance that compliance checks have been applied uniformly, so that my approval decision is based on objective evidence rather than trust alone.

As a project manager, I want to focus on risk, outcomes, and delivery decisions, so that administrative quality does not distract from project leadership.

Strategy

The strategy for the engineering admin agent draws on research and observations published between 2023 and 2025 by PMI, McKinsey, Engineers Australia, Thomson Reuters Institute, and PM-Partners. These sources indicate that generative AI delivers the greatest value when applied to high-impact, low-complexity tasks such as compliance checking and administrative coordination. The 2024 case study identified these tasks as labour-intensive, error-prone, and in some respects reliant on individual memory. In response, the 2025 design deliberately targets the assistance and augmentation stages of AI maturity, embedding GenAI within existing workflows rather than attempting wholesale automation. Consistent with guidance on safe AI adoption in engineering (see Engineers Australia, 2025), the agent enforces rules, flags risks, and provides structured feedback while preserving human accountability. This approach reflects both industry caution around high-risk settings and the opportunity for SMEs to gain disproportionate value by formalising tacit knowledge into scalable systems.

Solution Design

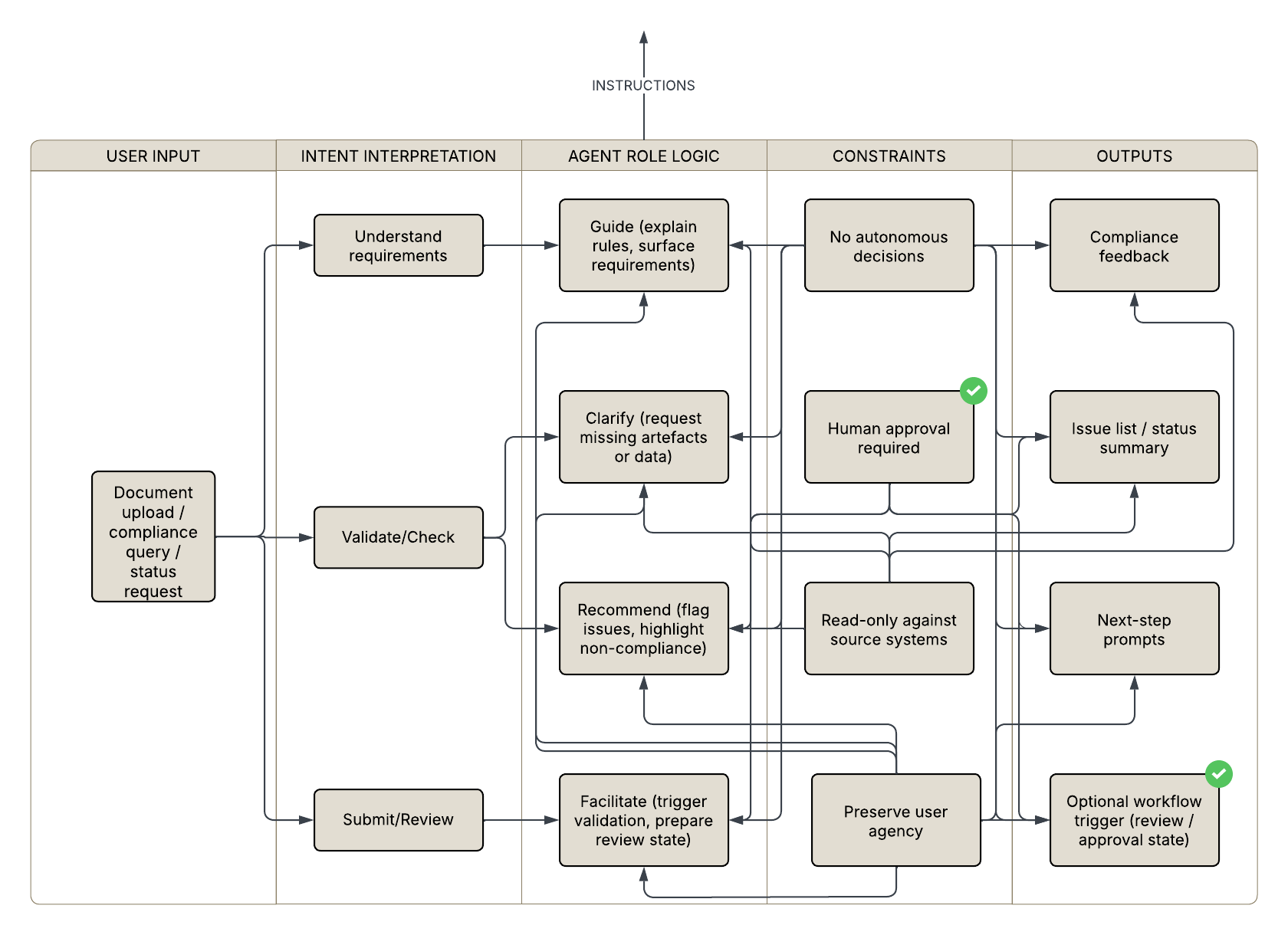

AI Agent Scope & Role

The engineering admin agent was designed as a role-aware, compliance-focused assistant that augments rather than replaces human decision-making across engineering handover workflows. Its scope is deliberately constrained to high-impact, low-judgement tasks: validating document compliance, checking handover package completeness, and surfacing risks early. For engineers, the agent provides immediate feedback during document preparation. For administrators, it performs consistent, repeatable compliance audits and package compilation. For project managers, it presents a consolidated view of readiness and outstanding issues to support approval decisions. This scoped design reflects lessons from the 2024 project, where efficiency gains plateaued due to reliance on tacit knowledge and manual checking, and aligns with best-practice guidance for safe AI use in high-risk professional settings.
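As an illustration of the completeness-checking part of this scope, the sketch below compares the document types submitted for a handover package against a required set. The document type names and the `PackageCheck` structure are hypothetical; the project's actual compliance matrix is not reproduced here.

```python
from dataclasses import dataclass

# Hypothetical required document types, for illustration only.
REQUIRED_DOC_TYPES = frozenset({"inspection_report", "test_certificate", "as_built_drawing"})


@dataclass(frozen=True)
class PackageCheck:
    """Result of comparing submitted document types with the required set."""
    missing: tuple[str, ...]
    unexpected: tuple[str, ...]

    @property
    def complete(self) -> bool:
        # A package is complete when nothing required is missing.
        return not self.missing


def check_completeness(submitted: set[str],
                       required: frozenset[str] = REQUIRED_DOC_TYPES) -> PackageCheck:
    """Report which required types are absent and which submitted types are not expected."""
    return PackageCheck(
        missing=tuple(sorted(required - submitted)),
        unexpected=tuple(sorted(submitted - required)),
    )


result = check_completeness({"inspection_report", "site_photo"})
print(result.missing)   # ('as_built_drawing', 'test_certificate')
print(result.complete)  # False
```

A check like this only reports gaps; the decision to approve or return the package stays with the human reviewer.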

Instruction Logic

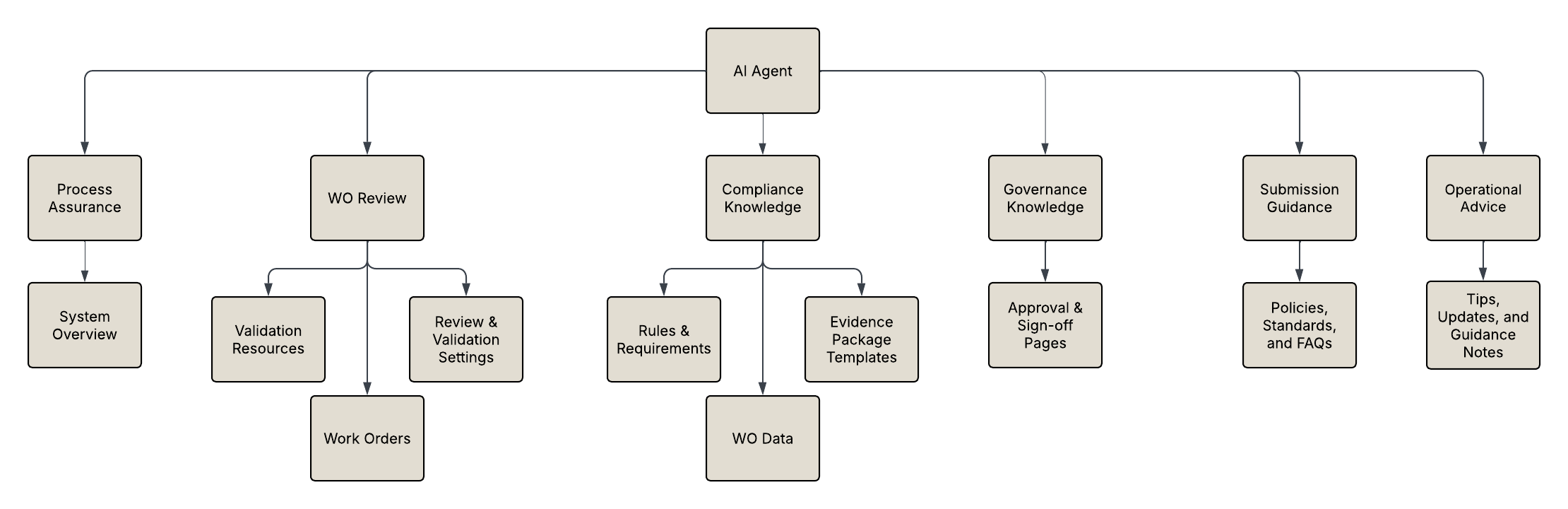

Data & Knowledge Preparation

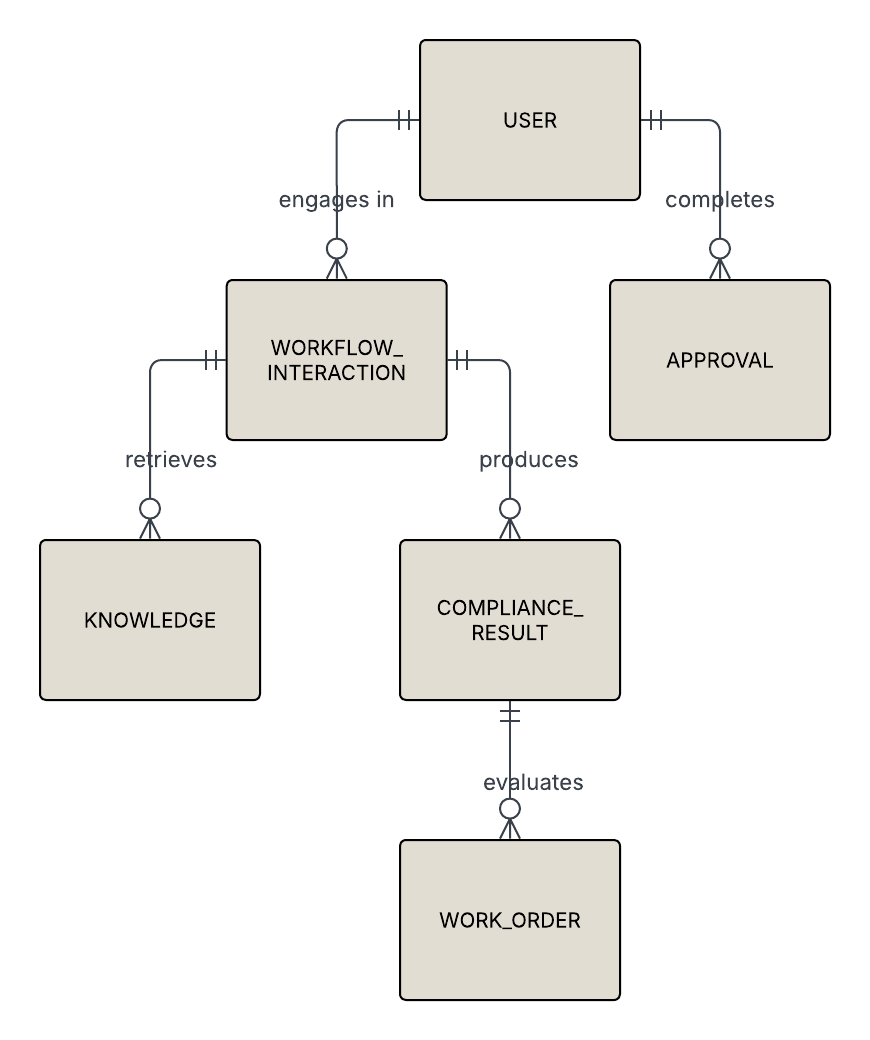

A central design decision was to externalise compliance knowledge into structured, machine-readable assets. The work order was defined as the core business entity, surrounded by document schemas, compliance matrices, and explicit naming and versioning rules.

These assets were derived indirectly from the manual checklists, SOPs, and experiential knowledge surfaced during the 2024 process optimisation work. By separating rules from interface and application logic, the solution ensures consistency, auditability, and ease of update as standards evolve. This reframes administrative expertise as a reusable organisational asset rather than an individual dependency.
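One way to picture this separation is a small data model with the work order at the centre and the naming and versioning rule held as data rather than buried in application logic. The sketch below is illustrative only: the `WorkOrder` and `Document` classes and the filename convention in `NAME_PATTERN` are assumptions, not the organisation's actual schema or rules.

```python
import re
from dataclasses import dataclass, field

# Hypothetical convention: <work order>_<document type>_v<major>.<minor>.pdf
NAME_PATTERN = re.compile(
    r"^(?P<wo>WO\d{4})_(?P<doc_type>[a-z_]+)_v(?P<major>\d+)\.(?P<minor>\d+)\.pdf$"
)


@dataclass
class Document:
    filename: str


@dataclass
class WorkOrder:
    """Core business entity: every document belongs to exactly one work order."""
    number: str
    documents: list[Document] = field(default_factory=list)


def naming_issues(work_order: WorkOrder) -> list[str]:
    """Return one message per document that breaks the naming or versioning rule."""
    issues = []
    for doc in work_order.documents:
        match = NAME_PATTERN.match(doc.filename)
        if match is None:
            issues.append(f"{doc.filename}: does not follow the naming convention")
        elif match["wo"] != work_order.number:
            issues.append(f"{doc.filename}: prefix does not match work order {work_order.number}")
    return issues


order = WorkOrder("WO1234", [
    Document("WO1234_inspection_report_v1.0.pdf"),
    Document("final report.pdf"),
    Document("WO9999_test_certificate_v2.1.pdf"),
])
for issue in naming_issues(order):
    print(issue)
```

Because the rule is a single pattern held outside the checking function, it can be updated when standards change without touching the interface.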

Data Model

Knowledge Structure

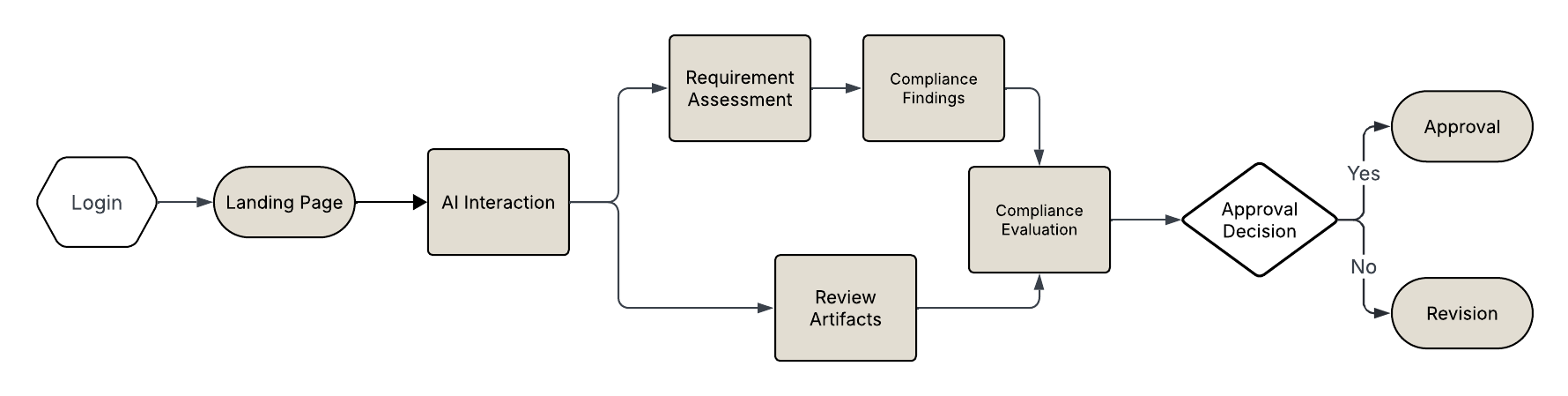

Interaction Design

Interaction design follows a role-segmented model, ensuring each user encounters only the information and actions relevant to their stage of the workflow. Engineers interact primarily through upload-and-review feedback loops, enabling early correction. Administrators use audit and compilation interactions that replace manual cross-checking with system-validated results.

Project managers engage through a summary-led view highlighting compliance status and residual risk. This design builds directly on the 2024 finding that cognitive load and context-switching were key contributors to error. Conversational and feedback-driven interactions are prioritised over static dashboards, supporting flow rather than interruption.
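The role-segmented model can be sketched as a function that takes the same set of compliance findings and returns a different view for each role. The `Role` enum, the finding fields, and the shape of each view are hypothetical and chosen only to illustrate the idea.

```python
from enum import Enum


class Role(Enum):
    ENGINEER = "engineer"
    ADMINISTRATOR = "administrator"
    PROJECT_MANAGER = "project_manager"


def view_for(role: Role, findings: list[dict]) -> dict:
    """Shape the same findings differently for each role (illustrative only)."""
    open_findings = [f for f in findings if not f["resolved"]]
    if role is Role.ENGINEER:
        # Engineers see actionable items to correct before submission.
        return {"to_fix": [f"{f['document']}: {f['message']}" for f in open_findings]}
    if role is Role.ADMINISTRATOR:
        # Administrators see the full audit, including resolved findings.
        return {"audit": findings, "open_count": len(open_findings)}
    # Project managers see a summary that supports the approval decision.
    return {"ready_for_approval": not open_findings, "open_count": len(open_findings)}


findings = [
    {"document": "test_certificate", "message": "signature missing", "resolved": False},
    {"document": "inspection_report", "message": "date missing", "resolved": True},
]
print(view_for(Role.ENGINEER, findings))
print(view_for(Role.PROJECT_MANAGER, findings))
```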

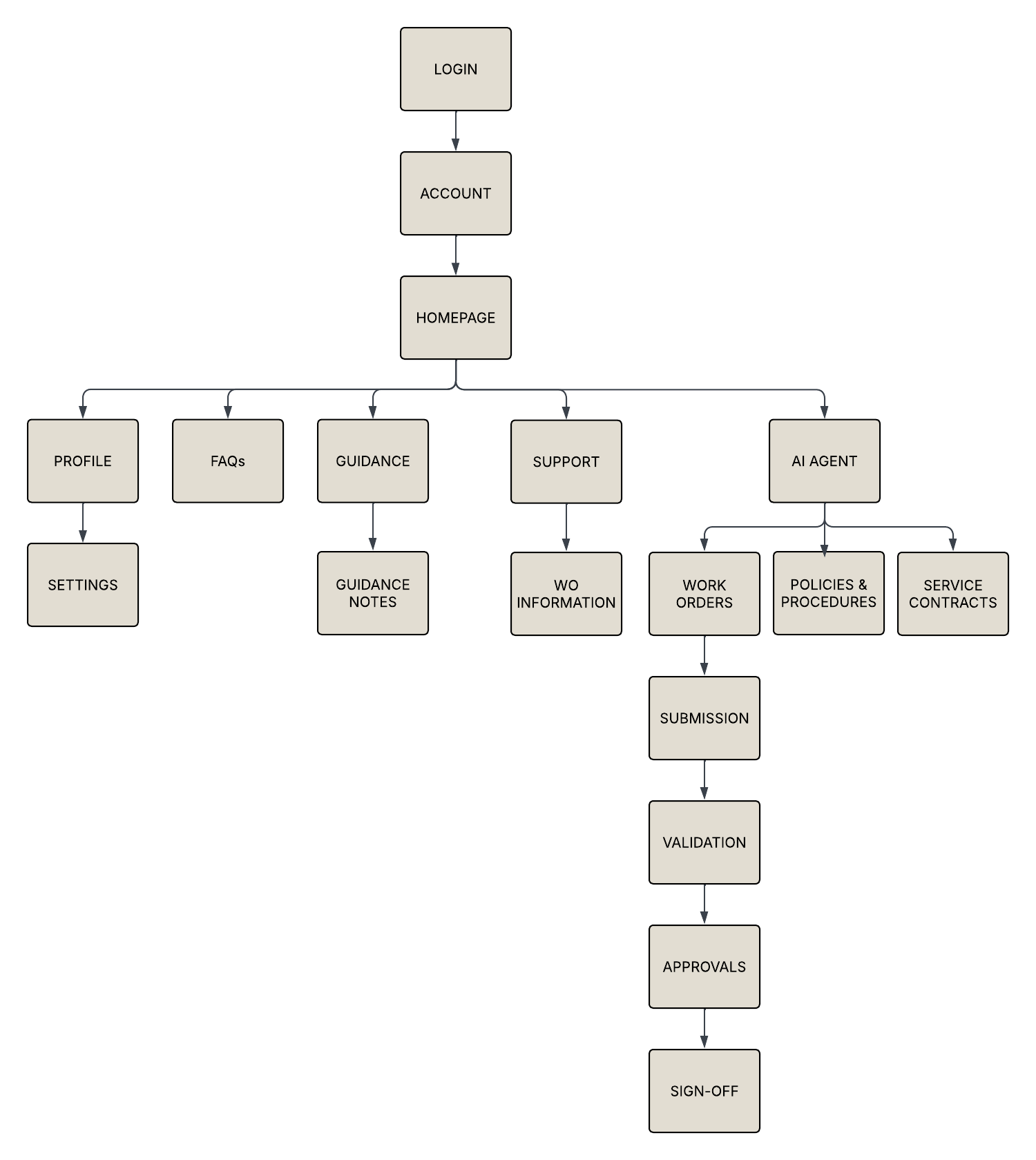

Information Architecture

User Flow

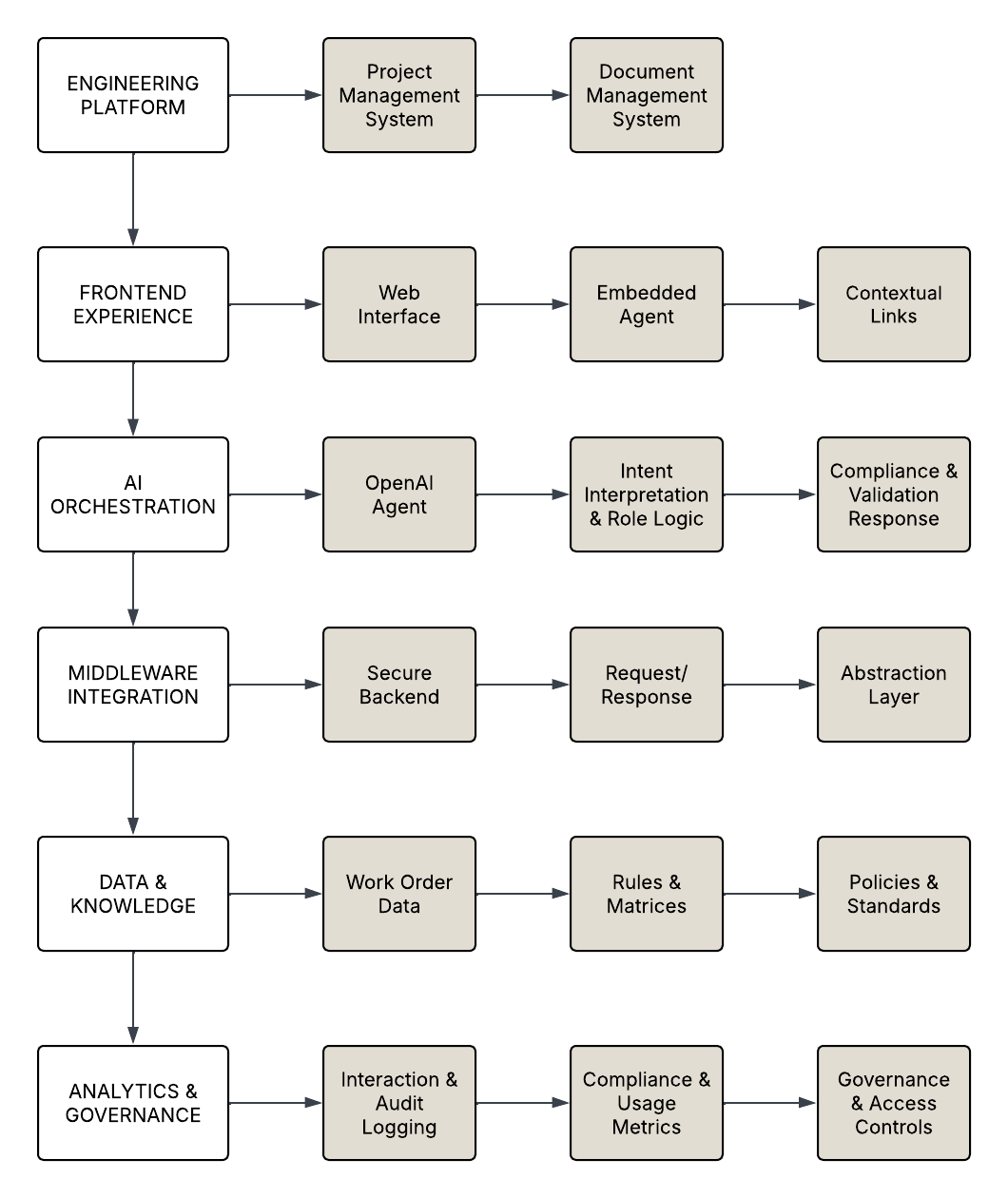

Technical Integration

The solution is architected as a modular AI agent layered over existing document workflows rather than a tightly coupled system replacement. Compliance logic and knowledge assets are loaded independently from the interface, enabling portability across environments and future integration with live document management systems.

The agent operates as a validation and orchestration layer, not a system of record, preserving compatibility with established engineering toolchains. This method of decoupling AI logic from front-end experience ensures scalability, security, and adaptability as organisational AI maturity increases.
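A minimal sketch of this decoupling, assuming the rules are stored as JSON and evaluated by a small generic function, is shown below. The rule format (`field`, `required`, `equals`, `message`) is invented for illustration and is not the project's actual rule schema.

```python
import json

# Hypothetical rule file content; in practice this would live outside the application code.
RULES_JSON = """
[
  {"id": "signed", "field": "signed", "equals": true, "message": "Document must be signed"},
  {"id": "dated", "field": "date", "required": true, "message": "Document must carry a date"}
]
"""


def load_rules(raw: str) -> list[dict]:
    """Parse rules from JSON text so they can change without a code release."""
    return json.loads(raw)


def evaluate(document: dict, rules: list[dict]) -> list[str]:
    """Return the message of every rule the document metadata fails."""
    failures = []
    for rule in rules:
        value = document.get(rule["field"])
        if rule.get("required") and value in (None, ""):
            failures.append(rule["message"])
        elif "equals" in rule and value != rule["equals"]:
            failures.append(rule["message"])
    return failures


rules = load_rules(RULES_JSON)
print(evaluate({"signed": False, "date": "2025-03-01"}, rules))  # ['Document must be signed']
```

Any interface, whether conversational or form-based, could call `evaluate` with the same rule set, which is the portability property described above.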

Analytics and governance were implemented at an operational level, supporting auditability, role-based control, and approval traceability. While advanced analytics and AI usage reporting were not fully developed, the system captured and persisted compliance states and workflow decisions as a functional governance layer.
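Persisting compliance states and workflow decisions can be as simple as an append-only log. The sketch below uses Python's built-in `sqlite3` module; the table layout, column names, and event names are assumptions for illustration and do not describe the prototype's actual storage.

```python
import sqlite3
from datetime import datetime, timezone


def open_log(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the audit log; an in-memory database is used by default."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS audit_log (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               recorded_at TEXT NOT NULL,
               work_order TEXT NOT NULL,
               actor_role TEXT NOT NULL,
               event TEXT NOT NULL,
               detail TEXT NOT NULL
           )"""
    )
    return conn


def record(conn: sqlite3.Connection, work_order: str, actor_role: str,
           event: str, detail: str) -> None:
    """Append one entry; rows are never updated or deleted."""
    conn.execute(
        "INSERT INTO audit_log (recorded_at, work_order, actor_role, event, detail) "
        "VALUES (?, ?, ?, ?, ?)",
        (datetime.now(timezone.utc).isoformat(), work_order, actor_role, event, detail),
    )
    conn.commit()


def history(conn: sqlite3.Connection, work_order: str) -> list[tuple]:
    """Return the entries for one work order in the order they were recorded."""
    cursor = conn.execute(
        "SELECT actor_role, event, detail FROM audit_log WHERE work_order = ? ORDER BY id",
        (work_order,),
    )
    return cursor.fetchall()


log = open_log()
record(log, "WO1234", "administrator", "audit_completed", "2 open findings")
record(log, "WO1234", "project_manager", "approval_decision", "returned for rework")
print(history(log, "WO1234"))
```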

Tech Stack

Results

In testing, the engineering admin agent demonstrated consistent detection of missing, incorrectly named, or improperly versioned documents across multiple simulated work orders. Compared to the 2024 baseline, the solution reduced reliance on manual checklists and significantly improved assurance of package completeness prior to approval. Importantly, it preserved human decision‑making while standardising the quality of inputs.

Use Case 1: Engineering

For engineers, the agent provides immediate validation of uploaded documents against known requirements. This reduces downstream rework and shortens feedback loops identified as problematic in the 2024 case study. Engineers can focus on technical quality rather than administrative recall, improving overall productivity.

Use Case 2: Administration

Administrators benefit from automated auditing and compilation, replacing time‑consuming manual cross‑checks described in the original process optimisation project. The agent acts as a consistent second reviewer, reducing error rates and cognitive fatigue.

Use Case 3: Project Management

Project managers gain a consolidated view of package readiness, enabling faster, more confident approvals. This directly addresses the prior reliance on trust in administrative processes rather than transparent evidence.

Limitations

The prototype relies on predefined compliance rules and does not yet integrate with live operational systems. It also assumes document formats are standardised. Finally, organisational change management remains outside the scope of the technical solution.

Future Directions

Future iterations of the engineering admin project would focus on strengthening analytics, interoperability, and controlled automation while maintaining a compliance-first approach. Priority enhancements include implementing aggregated analytics dashboards to surface trends in non-compliance, rework frequency, and approval cycle times across work orders. Deeper AI interaction logging could support continuous improvement of guidance quality and identify recurrent knowledge gaps in engineering teams. Integration with live document management and project systems would reduce manual upload steps while preserving read-only constraints. Over time, selected low-risk actions such as automated pre-validation at upload or templated revision checklists could be introduced under explicit human approval.
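As a rough indication of what the aggregated analytics could compute, the sketch below counts non-compliance findings per rule and averages approval cycle times across work orders. The input shapes, rule names, and sample values are hypothetical and are not results from the project.

```python
from collections import Counter
from datetime import date
from statistics import mean


def non_compliance_by_rule(findings: list[tuple[str, str]]) -> Counter:
    """Count findings per rule; each finding is a (work_order, rule) pair."""
    return Counter(rule for _, rule in findings)


def mean_approval_days(cycles: list[tuple[date, date]]) -> float:
    """Average days between submission and approval across work orders."""
    return mean((approved - submitted).days for submitted, approved in cycles)


# Illustrative sample data only.
sample_findings = [
    ("WO1234", "naming"),
    ("WO1234", "signature"),
    ("WO5678", "naming"),
]
sample_cycles = [
    (date(2025, 3, 3), date(2025, 3, 6)),
    (date(2025, 3, 10), date(2025, 3, 15)),
]
print(non_compliance_by_rule(sample_findings).most_common(1))  # [('naming', 2)]
print(mean_approval_days(sample_cycles))
```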

Summary

The 2025 engineering admin agent extends the 2024 engineering business improvement project from process optimisation into AI‑assisted compliance enforcement. By externalising rules, structuring knowledge, and designing for multiple roles, the solution demonstrates a practical pathway for SMEs to adopt GenAI responsibly. The project reinforced the importance of defining core business entities before introducing AI, externalising logic rather than embedding it in prompts, and designing explicitly for human‑AI collaboration rather than replacement.

Bibliography

Engineers Australia (2025). The impact of AI and generative technologies on the engineering profession. Engineers Australia, January 2025.

McKinsey (2025). Superagency in the workplace: Empowering people to unlock AI’s full potential. Mayer, H., Yee, L., Chui, M., and Roberts, R. writing for McKinsey & Company, January 2025.

PM-Partners (2024). Generative AI for project managers: Transforming the way you work. Dodsworth, Q. writing for PM-Partners, 15 August 2024.

Project Management Institute (PMI, 2023). Shaping the future of project management with AI. PMI, October 2023.

PMI (2024). Pushing the limits: Transforming project management with GenAI innovation. PMI, September 2024.

Thomson Reuters (2025). 2025 Generative AI in professional services report: Ready for the next step of strategic applications. Thomson Reuters Institute, 7 April 2025.